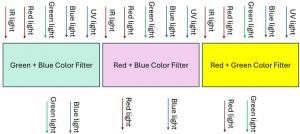

Color camera sensors often work by placing a 2×2 pattern of color filters in front of every 2×2 block of monochrome pixels in the sensor (often duplicating green, so that three colors fill the four positions). This is shown in the figure below, where each filter rejects all but a single frequency range of light. There is smooth fall-off between […]
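To make the 2×2 filter layout concrete, here is a minimal sketch in plain Python. It assumes an RGGB arrangement (red top-left, blue bottom-right, green on the other diagonal), which is one common layout and not necessarily the one in the figure. The names `BAYER_TILE`, `filter_at`, and `mosaic` are hypothetical, chosen for illustration. The sketch also treats each filter as ideal, passing exactly one channel, so it ignores the smooth fall-off of real filters.

```python
# Assumed RGGB tile: green appears twice so three colors fill four positions.
BAYER_TILE = (("R", "G"),
              ("G", "B"))

CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_at(row, col, tile=BAYER_TILE):
    """Return the filter color covering the pixel at (row, col)."""
    return tile[row % 2][col % 2]


def mosaic(rgb_image, tile=BAYER_TILE):
    """Simulate what the monochrome pixels record behind ideal filters.

    rgb_image is a list of rows, each pixel an (r, g, b) tuple.
    Returns a single-channel image of the same size, where each pixel
    keeps only the channel its filter passes.
    """
    return [
        [pixel[CHANNEL_INDEX[filter_at(r, c, tile)]]
         for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]
```

For example, calling `mosaic` on a 2×2 image in which every pixel is `(10, 20, 30)` returns `[[10, 20], [20, 30]]`: each position keeps only the red, green, green, or blue value behind its filter.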